Circonus has always been API-driven, and this has always been one of our product’s core strengths. Via our API, you can create anything that you can in the UI and more. With so many of our customers moving to API-driven platforms like AWS, DigitalOcean, Google Compute Engine (GCE), Joyent, Microsoft Azure, and even private clouds, we have seen the emergence of a variety of tools (like Terraform) that allow these resources to be abstracted. Now, with Circonus built into Terraform, it is possible to declaratively codify your application’s monitoring, alerting, and escalation, as well as the resources it runs on.

Terraform is a tool from HashiCorp for building, changing, and versioning infrastructure, which can be used to manage a wide variety of popular and custom service providers. Now, Terraform 0.9 includes an integration for managing Circonus.
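To use the integration, a configuration first declares the Circonus provider. The following is a minimal sketch in Terraform 0.9 syntax, assuming the API token is supplied through a variable; the variable name circonus_api_token is hypothetical and not part of the original examples.

```hcl
# Hypothetical variable holding the Circonus API token; supply it at
# plan/apply time rather than committing it to version control.
variable "circonus_api_token" {
  type = "string"
}

# Configure the Circonus provider with the token above.
provider "circonus" {
  key = "${var.circonus_api_token}"
}
```

Passing the token in as a variable keeps the secret out of the versioned configuration while the monitoring definitions themselves remain in code.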

These are a few key features of the Circonus Provider in Terraform:

- Monitoring as Code – Alongside Infrastructure as Code. Monitoring (i.e. what to monitor, how to visualize, and when to alert) is described using the same high-level configuration syntax used to describe infrastructure. This allows a blueprint of your datacenter, as well as your business rules, to be versioned and treated as you would any other code. Additionally, monitoring can be shared and reused.

- Execution Plans – Terraform has a “planning” step where it generates an execution plan of what will be monitored, visualized, and alerted on.

- Resource Graphs – Terraform builds a graph of all your resources, which can now include how those resources are monitored, and parallelizes the creation and modification of any non-dependent resources.

- Change Automation – Complex changesets can be applied to your infrastructure and metrics, visualizations, or alerts, which can all be created, deactivated, or deleted with minimal human interaction.

This last piece, Change Automation, is one of the most powerful features of the Circonus Provider in Terraform. Allocations and services can come and go (within a few seconds or a few days), and the monitoring of each resource dynamically updates accordingly.

While our larger customers were already running in programmatically defined and CMDB-driven worlds, our cloud computing customers didn’t share our API-centric view of the world. The lifecycle management of our metrics was either implicit, creating a pile of quickly outdated metrics, or ill-specified, in that they couldn’t make sense of the voluminous data. What was missing was an easy way to implement this level of monitoring across the incredibly complex SOA our customers were using.

Now when organizations ship and deploy an application to the cloud, they can also specify a definition of health (i.e. what a healthy system looks like). This runs side-by-side with the code that specifies the infrastructure supporting the application. For instance, if your application runs in an AWS Auto-Scaling Group and consumes RDS resources, it’s possible to unify the metrics, visualization, and alerting across these different systems using Circonus. Application owners now have a unified deployment framework that can measure itself against internal or external SLAs. With the Circonus Provider in Terraform 0.9, companies running on either public clouds or in private data centers can now programmatically manage their monitoring infrastructure.

As an API-centric service provider, Circonus has always worked with configuration management software. Now, in the era of mutable infrastructure, Terraform extends this API-centric view to provide ubiquitous coverage and consistency across all of the systems that Circonus can monitor. Terraform enables application owners to create a higher-level abstraction of the application, datacenter, and associated services, and present this information back to the rest of the organization in a consistent way. With the Circonus Provider, any Terraform-provisioned resource that can be monitored can be referenced such that there are no blind spots or exceptions. As an API-driven company, we’ve unfortunately seen blind spots develop, but with Terraform these blind spots are systematically addressed, providing a consistent look, feel, and escalation workflow for application teams and the rest of the organization.

It has been and continues to be an amazing journey to ingest the firehose of data from ephemeral infrastructure, and this is our latest step toward servicing cloud-centric workloads. As industry veterans who remember when microservice architectures were simply called “SOA,” it is impressive to watch the rate at which new metrics are produced and the dramatic lifespan reduction for network endpoints. At Circonus, our first integration with some of the underlying technologies that enable a modern SOA came at HashiConf 2016. At that time we had nascent integrations with Consul, Nomad, and Vault, but in the intervening months we have added more and more to the product to increase the value customers can get from each of these industry-accepted products:

- Consul is the gold standard for service discovery, and we have recently added a native Consul check-type that makes cluster management of services a snap.

- Nomad is a performant, robust, and datacenter-aware scheduler with native Vault integration.

- Vault can be used to secure, store, and control access to secrets in a SOA.

Each of these products utilizes our circonus-gometrics library. When enabled, circonus-gometrics automatically creates numerous checks and enables metrics for all the available telemetry (creating histogram, text, or numeric metrics as appropriate for the telemetry stream). Users can now monitor these tools from a single instance, and have a unified lifecycle management framework for both infrastructure and application monitoring. But how do you address the emergent DevOps pattern of separating the infrastructure management from the running of applications? Enter Terraform. With help from HashiCorp, we began an R&D experiment to investigate the next step and assess the feasibility of unifying these two axes of organizational responsibility. Here are some of the things that we’ve done over the last several months:

These features and many more, the fruit of expert insights, are what we’ve built into the product, and more will be rolled out in the coming months.

Example of a Circonus Cluster definition:

    variable "consul_tags" {
      type    = "list"
      default = [ "app:consul", "source:consul" ]
    }

    resource "circonus_metric_cluster" "catalog-service-query-tags" {
      name        = "Aggregate Consul Catalog Queries for Service Tags"
      description = "Aggregate catalog queries for Consul service tags on all consul servers"

      query {
        definition = "consul`consul`catalog`service`query-tag`*"
        type       = "average"
      }

      tags = ["${var.consul_tags}", "subsystem:catalog"]
    }

Then merge these into a histogram:

    resource "circonus_check" "query-tags" {
      name   = "Consul Catalog Query Tags (Merged Histogram)"
      period = "60s"

      collector {
        id = "/broker/1490"
      }

      caql {
        query = <<EOF
    search:metric:histogram("consul`consul`catalog`service`query-tag (active:1)") | histogram:merge()
    EOF
      }

      metric {
        name = "output[1]"
        tags = ["${var.consul_tags}", "subsystem:catalog"]
        type = "histogram"
        unit = "nanoseconds"
      }

      tags = ["${var.consul_tags}", "subsystem:catalog"]
    }

Then add the 99th Percentile:

    resource "circonus_check" "query-tag-99" {
      name   = "Consul Query Tag 99th Percentile"
      period = "60s"

      collector {
        id = "/broker/1490"
      }

      caql {
        query = <<EOF
    search:metric:histogram("consul`consul`http`GET`v1`kv`_ (active:1)") | histogram:merge() | histogram:percentile(99)
    EOF
      }

      metric {
        name = "output[1]"
        tags = ["${var.consul_tags}", "subsystem:catalog"]
        type = "histogram"
        unit = "nanoseconds"
      }

      tags = ["${var.consul_tags}", "subsystem:catalog"]
    }

And add a Graph:

    resource "circonus_graph" "query-tag" {
      name        = "Consul Query Tag Overview"
      description = "The per second histogram of all Consul Query tags metrics (with 99th %tile)"
      line_style  = "stepped"

      metric {
        check       = "${circonus_check.query-tags.check_id}"
        metric_name = "output[1]"
        metric_type = "histogram"
        axis        = "left"
        color       = "#33aa33"
        name        = "Query Latency"
      }

      metric {
        check       = "${circonus_check.query-tag-99.check_id}"
        metric_name = "output[1]"
        metric_type = "histogram"
        axis        = "left"
        color       = "#caac00"
        name        = "TP99 Query Latency"
      }

      tags = ["${var.consul_tags}", "owner:team1"]
    }

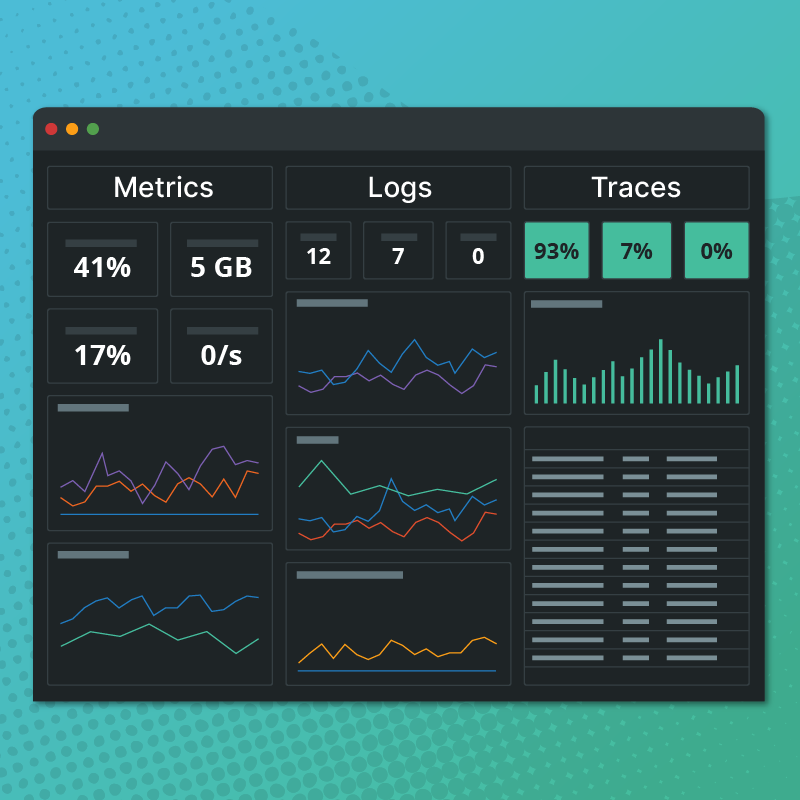

And you get this result:

Finally, we want to be alerted if the 99th Percentile goes above 8000 ms. So, we’ll create the contact group (along with SMS, we can use Slack, OpsGenie, PagerDuty, VictorOps, or email):

    resource "circonus_contact_group" "consul-owners-escalation" {
      name = "Consul Owners Notification"

      sms {
        user = "${var.alert_sms_user_name}"
      }

      email {
        address = "[email protected]"
      }

      tags = [ "${var.consul_tags}", "owner:team1" ]
    }
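The contact group above references var.alert_sms_user_name, which none of the snippets in this post declare. A minimal sketch of such a declaration, in the same Terraform 0.9 syntax, might look like the following; the description text is an assumption added for illustration.

```hcl
# Declares the variable referenced by the sms block of the contact group.
# No default is set, so Terraform will ask for a value at plan/apply time.
variable "alert_sms_user_name" {
  type        = "string"
  description = "Circonus user who should receive SMS alerts (assumed meaning)"
}
```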

And then define the rule:

    resource "circonus_rule_set" "99th-threshhold" {
      check       = "${circonus_check.query-tag-99.check_id}"
      metric_name = "output[1]"

      notes = <<EOF
    Query latency is high, take corrective action.
    EOF

      link = "https://www.example.com/wiki/consul-latency-playbook"

      if {
        value {
          max_value = "8000" # ms
        }

        then {
          notify = [
            "${circonus_contact_group.consul-owners-escalation.id}",
          ]

          severity = 1
        }
      }

      tags = ["${var.consul_tags}", "owner:team1"]
    }

With a little copy and paste, we can do exactly the same for all the other metrics in the system.
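As a rough illustration of that copy and paste, the merged-histogram check can be repeated for another metric. The sketch below reuses the Consul KV GET metric name that already appears in the percentile query; the resource name kv-get, the check name, and the subsystem:kv tag are hypothetical, and the broker ID is simply carried over from the earlier examples.

```hcl
# Same pattern as the query-tags check, pointed at a different metric.
resource "circonus_check" "kv-get" {
  name   = "Consul KV GET (Merged Histogram)"
  period = "60s"

  collector {
    id = "/broker/1490"
  }

  # Merge the per-node histograms for the KV GET endpoint into one stream.
  caql {
    query = <<EOF
search:metric:histogram("consul`consul`http`GET`v1`kv`_ (active:1)") | histogram:merge()
EOF
  }

  metric {
    name = "output[1]"
    tags = ["${var.consul_tags}", "subsystem:kv"]
    type = "histogram"
    unit = "nanoseconds"
  }

  tags = ["${var.consul_tags}", "subsystem:kv"]
}
```

Only the resource name, display name, search pattern, and tags change; the collector, metric block, and tag variable are shared with the earlier checks.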

Note that the original metric was automatically created when Consul was deployed, and you can do the same thing with any number of other numeric data points, or do the same with native histogram data (merge all the histograms into a combined histogram and apply analytics across all your Consul nodes).

We also have the beginnings of a sample set of implementations here, which builds on the sample Consul, Nomad, & Vault telemetry integration here.