Here at Circonus, we have a long heritage of open source software involvement. So when we saw that Istio provided a well-designed interface to syndicate service telemetry via adapters, we knew that a Circonus adapter would be a natural fit. Istio has been designed to provide a highly performant, highly scalable application control plane, and Circonus has been designed with performance and scalability as core principles.

Today we are happy to announce the availability of the Circonus adapter for the Istio service mesh. This blog post will go over the development of this adapter and show you how to get up and running with it quickly. We know you’ll be excited about this, because Kubernetes and Istio give you the ability to scale to the level that Circonus was engineered to perform at, a level beyond what other telemetry solutions handle.

If you don’t know what a service mesh is, you aren’t alone, but odds are you have been using one for years. The routing infrastructure of the Internet is a service mesh; it facilitates TCP retransmission, access control, dynamic routing, traffic shaping, etc. The monolithic applications that have dominated the web are giving way to applications composed of microservices. Istio provides control plane functionality for container-based distributed applications via a sidecar proxy. It gives the service operator a rich set of functionality to control a Kubernetes-orchestrated set of services, without requiring the services themselves to implement any control plane features.

Istio’s Mixer provides an adapter model, which allowed us to develop an adapter by creating handlers that interface Mixer with external infrastructure backends. Mixer also provides a set of templates, each of which exposes a different set of metadata that can be provided to the adapter. For a metrics adapter such as the Circonus adapter, this metadata includes metrics like request duration, request count, request payload size, and response payload size. To activate the Circonus adapter in an Istio-enabled Kubernetes cluster, simply use the istioctl command to inject the Circonus operator configuration into the K8s cluster, and the metrics will start flowing.

Here’s an architectural overview of how Mixer interacts with these external backends:

Istio also contains metrics adapters for StatsD and Prometheus. However, a few things differentiate the Circonus adapter from those other adapters. First, the Circonus adapter allows us to collect the request durations as a histogram, instead of just recording fixed percentiles. This allows us to calculate any quantile over arbitrary time windows, and perform statistical analyses on the histogram which is collected. Second, data can be retained essentially forever. Third, the telemetry data is retained in a durable environment, outside the blast radius of any of the ephemeral assets managed by Kubernetes.
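To make the first point concrete, here is a minimal sketch of why a histogram can answer any quantile after the fact. It is a simplified stand-in, not Circonus’s actual log-linear histogram implementation. The `bin` function, the `Histogram` type, and the two-significant-digit bucketing are assumptions chosen for illustration. Bin width grows with the magnitude of the values, counts are kept per bin, and a quantile is read back by walking the cumulative counts.

```go
package main

import (
	"fmt"
	"math"
	"sort"
)

// bin maps a non-negative value to the lower edge of its bin, keeping two
// significant decimal digits: 7 -> 7, 42 -> 42, 123 -> 120, 98765 -> 98000.
// Bin width grows with magnitude (log-linear), so relative error stays bounded.
// Simplified illustration only; not the circonus-gometrics binning code.
func bin(v int64) int64 {
	scale := int64(1)
	for v/scale >= 100 {
		scale *= 10
	}
	return v / scale * scale
}

// Histogram counts observations per bin instead of storing raw samples.
type Histogram struct {
	counts map[int64]uint64
	total  uint64
}

func NewHistogram() *Histogram {
	return &Histogram{counts: make(map[int64]uint64)}
}

// Record adds one observation (here: a request duration in milliseconds).
func (h *Histogram) Record(v int64) {
	h.counts[bin(v)]++
	h.total++
}

// Merge folds another histogram in. Because bin boundaries line up, merging
// is exact, which is what allows quantiles over arbitrary time windows.
func (h *Histogram) Merge(o *Histogram) {
	for b, c := range o.counts {
		h.counts[b] += c
	}
	h.total += o.total
}

// Quantile returns the lower edge of the bin holding the q-th quantile
// (0 < q <= 1); the answer is accurate to within one bin width.
func (h *Histogram) Quantile(q float64) int64 {
	if h.total == 0 {
		return 0
	}
	bins := make([]int64, 0, len(h.counts))
	for b := range h.counts {
		bins = append(bins, b)
	}
	sort.Slice(bins, func(i, j int) bool { return bins[i] < bins[j] })

	rank := uint64(math.Ceil(q * float64(h.total)))
	if rank < 1 {
		rank = 1
	}
	var seen uint64
	for _, b := range bins {
		seen += h.counts[b]
		if seen >= rank {
			return b
		}
	}
	return bins[len(bins)-1]
}

func main() {
	// Two hypothetical 10-second intervals of request durations (ms).
	first, second := NewHistogram(), NewHistogram()
	for v := int64(1); v <= 50; v++ {
		first.Record(v)
	}
	for v := int64(51); v <= 100; v++ {
		second.Record(v)
	}

	// Combine the intervals after the fact and ask for any quantile.
	window := NewHistogram()
	window.Merge(first)
	window.Merge(second)
	for _, q := range []float64{0.5, 0.9, 0.95, 0.99} {
		fmt.Printf("q=%.2f: %d ms\n", q, window.Quantile(q))
	}
}
```

Two histograms with the same bin boundaries merge by adding counts. The same `Quantile` call therefore works for a single interval or for a week of merged intervals, which a stream of pre-computed percentiles cannot offer.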

Let’s take a look at the guts of how data gets from Istio into Circonus. Istio’s adapter framework exposes a number of methods which are available to adapter developers. The HandleMetric() method is called for a set of metric instances generated from each request that Istio handles. In our operator configuration, we can specify the metrics that we want to act on, and their types:

```yaml
spec:
  # HTTPTrap url, replace this with your account submission url
  submission_url: "https://trap.noit.circonus.net/module/httptrap/myuuid/mysecret"
  submission_interval: "10s"
  metrics:
  - name: requestcount.metric.istio-system
    type: COUNTER
  - name: requestduration.metric.istio-system
    type: DISTRIBUTION
  - name: requestsize.metric.istio-system
    type: GAUGE
  - name: responsesize.metric.istio-system
    type: GAUGE
```

Here we configure the Circonus handler with a submission URL for an HTTPTrap check and an interval at which to send metrics. In this example, we specify four metrics to gather, along with their types. Notice that we collect the requestduration metric as a DISTRIBUTION type, which will be processed as a histogram in Circonus. This retains fidelity over time, as opposed to averaging that metric or calculating a percentile before recording the value (both of those techniques throw away information about the shape of the distribution).
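A small hypothetical example shows what goes wrong when a percentile is calculated before recording. The durations below are made up, and `percentile` uses the simple nearest-rank definition. The only point is that averaging two per-interval 95th percentiles does not give the 95th percentile of the combined traffic.

```go
package main

import (
	"fmt"
	"math"
	"sort"
)

// percentile returns the nearest-rank q-th percentile (0 < q <= 1) of a
// non-empty slice, without modifying the caller's slice.
func percentile(xs []float64, q float64) float64 {
	s := append([]float64(nil), xs...)
	sort.Float64s(s)
	rank := int(math.Ceil(q * float64(len(s))))
	if rank < 1 {
		rank = 1
	}
	return s[rank-1]
}

// repeat returns n copies of v.
func repeat(v float64, n int) []float64 {
	out := make([]float64, n)
	for i := range out {
		out[i] = v
	}
	return out
}

func main() {
	// Hypothetical request durations (ms) for two 10-second intervals.
	quiet := repeat(10, 20)                            // every request is fast
	busy := append(repeat(10, 10), repeat(400, 10)...) // half the requests are slow

	p95Quiet := percentile(quiet, 0.95)
	p95Busy := percentile(busy, 0.95)
	all := append(append([]float64(nil), quiet...), busy...)

	fmt.Printf("average of per-interval p95s: %.0f ms\n", (p95Quiet+p95Busy)/2)
	fmt.Printf("p95 over both intervals:      %.0f ms\n", percentile(all, 0.95))
}
```

With these numbers, the averaged value reports 205 ms while the true 95th percentile over both intervals is 400 ms. Once only the percentiles are stored, there is no way to recover the second figure.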

For each request, Mixer calls the HandleMetric() method with instances of the metrics we have specified. Let’s take a look at the code:

```go
// HandleMetric submits metrics to Circonus via circonus-gometrics
func (h *handler) HandleMetric(ctx context.Context, insts []*metric.Instance) error {
	for _, inst := range insts {
		metricName := inst.Name
		// look up the type configured for this metric in the operator config
		metricType := h.metrics[metricName]

		switch metricType {
		case config.GAUGE:
			value, _ := inst.Value.(int64)
			h.cm.Gauge(metricName, value)
		case config.COUNTER:
			h.cm.Increment(metricName)
		case config.DISTRIBUTION:
			// float64(value) is the duration in nanoseconds
			value, _ := inst.Value.(time.Duration)
			h.cm.Timing(metricName, float64(value))
		}
	}
	return nil
}
```

Here we can see that HandleMetric() is called with a Mixer context and a set of metric instances. We iterate over each instance, look up its configured type, and call the appropriate circonus-gometrics method. The handler holds a circonus-gometrics object, which makes submitting the actual metric trivial to implement in this framework. Setting up the handler is a bit more complex, but still not rocket science:

```go
// Build constructs a circonus-gometrics instance and sets up the handler
func (b *builder) Build(ctx context.Context, env adapter.Env) (adapter.Handler, error) {
	bridge := &logToEnvLogger{env: env}

	cmc := &cgm.Config{
		CheckManager: checkmgr.Config{
			Check: checkmgr.CheckConfig{
				SubmissionURL: b.adpCfg.SubmissionUrl,
			},
		},
		Log:      log.New(bridge, "", 0),
		Debug:    true, // enable [DEBUG] level logging for env.Logger
		Interval: "0s", // flush via ScheduleDaemon based ticker
	}

	cm, err := cgm.NewCirconusMetrics(cmc)
	if err != nil {
		err = env.Logger().Errorf("Could not create NewCirconusMetrics: %v", err)
		return nil, err
	}

	// create a context with cancel based on the istio context
	adapterContext, adapterCancel := context.WithCancel(ctx)

	env.ScheduleDaemon(
		func() {
			ticker := time.NewTicker(b.adpCfg.SubmissionInterval)

			for {
				select {
				case <-ticker.C:
					cm.Flush()
				case <-adapterContext.Done():
					// flush once more so nothing buffered is lost on shutdown
					ticker.Stop()
					cm.Flush()
					return
				}
			}
		})

	// map each configured metric name to its type for HandleMetric
	metrics := make(map[string]config.Params_MetricInfo_Type)
	ac := b.adpCfg
	for _, adpMetric := range ac.Metrics {
		metrics[adpMetric.Name] = adpMetric.Type
	}

	return &handler{cm: cm, env: env, metrics: metrics, cancel: adapterCancel}, nil
}
```

Mixer provides a builder type, on which we define the Build method. Again, a Mixer context is passed, along with an environment object representing Mixer’s configuration. We create a new circonus-gometrics object and deliberately disable automatic metric flushing by setting Interval to "0s". We do this because Mixer requires adapters to start goroutines through the env.ScheduleDaemon() method, which wraps them in Mixer’s panic handler, so we run the flush ticker there ourselves. You’ll notice that we’ve created our own adapterContext via context.WithCancel. This allows us to shut down the metrics flushing goroutine by calling h.cancel() in the Close() method handler provided by Mixer; the goroutine flushes one last time before it exits. We also want to send any log events from CGM (circonus-gometrics) to Mixer’s log. Mixer provides an env.Logger() interface, which is based on glog, but CGM uses the standard Go log package. How did we resolve this impedance mismatch? With a logger bridge: an io.Writer behind CGM’s logger that passes every logging statement CGM generates to Mixer’s logger at a matching level.

```go
// logToEnvLogger converts CGM log package writes to env.Logger()
func (b logToEnvLogger) Write(msg []byte) (int, error) {
	// pass msg as an argument rather than as the format string, so a '%'
	// inside a log line is not interpreted as a formatting verb
	if bytes.HasPrefix(msg, []byte("[ERROR]")) {
		b.env.Logger().Errorf("%s", msg)
	} else if bytes.HasPrefix(msg, []byte("[WARN]")) {
		b.env.Logger().Warningf("%s", msg)
	} else if bytes.HasPrefix(msg, []byte("[DEBUG]")) {
		b.env.Logger().Infof("%s", msg)
	} else {
		b.env.Logger().Infof("%s", msg)
	}
	return len(msg), nil
}
```

For the full adapter codebase, see the Istio GitHub repository.

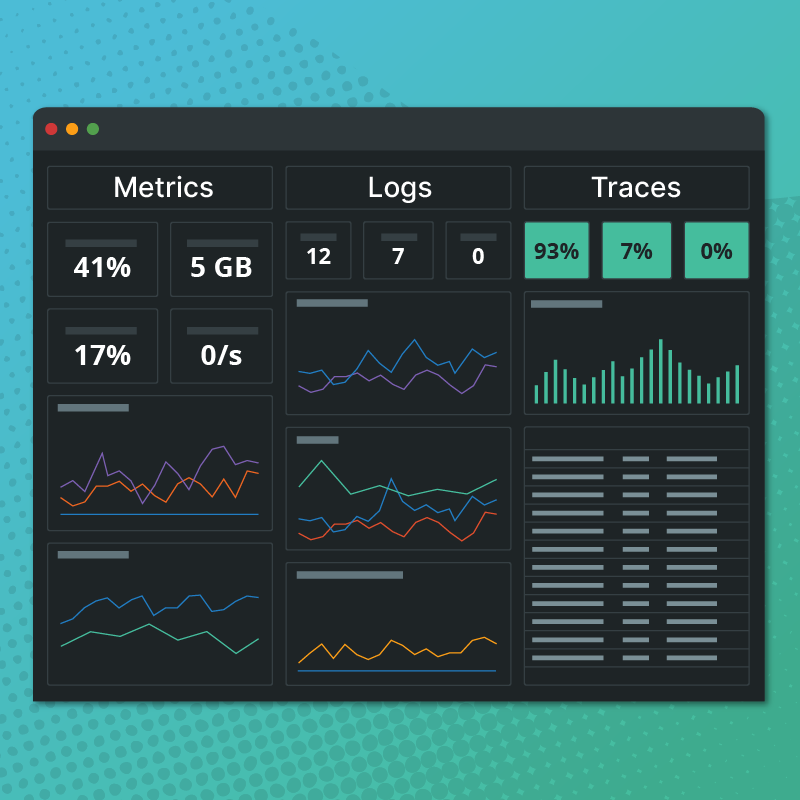

Enough with the theory, though; let’s see what this thing looks like in action. I set up a Google Kubernetes Engine deployment, loaded a development version of Istio with the Circonus adapter, and deployed the sample BookInfo application that is provided with Istio. The image above is a heatmap of the distribution of request durations from requests made to the application. You’ll notice the histogram overlay for the highlighted time slice. I added an overlay showing the median, 90th, and 95th percentile response times; it’s possible to generate these at arbitrary quantiles and time windows because we store the data natively as log-linear histograms. Notice that the median and 90th percentile are relatively fixed, while the 95th percentile tends to fluctuate over a range of a few hundred milliseconds. This type of deep observability can be used to quantify the performance of Istio itself across versions as it continues its rapid growth. Or, more likely, it will be used to identify issues within the deployed application. If your 95th percentile isn’t meeting your internal Service Level Objectives (SLOs), that’s a good sign you have some work to do. After all, if 1 in 20 users is having a sub-par experience on your application, don’t you want to know about it?

That looks like fun, so let’s lay out how to get this stuff up and running. The first thing we’ll need is a Kubernetes cluster. Google Kubernetes Engine provides an easy way to get a cluster up quickly.

There are a few other options documented in the Istio docs if you don’t want to use GKE, but these are the notes I used to get up and running. I used the gcloud command-line utility as follows after deploying the cluster in the web UI.

```shell
# set your zones and region
$ gcloud config set compute/zone us-west1-a
$ gcloud config set compute/region us-west1

# create the cluster
$ gcloud alpha container clusters create istio-testing --num-nodes=4

# get the credentials and put them in kubeconfig
$ gcloud container clusters get-credentials istio-testing --zone us-west1-a --project istio-circonus

# grant cluster admin permissions
$ kubectl create clusterrolebinding cluster-admin-binding --clusterrole=cluster-admin --user=$(gcloud config get-value core/account)
```

Poof, you have a Kubernetes cluster. Now let’s install Istio (refer to the Istio docs for details):

```shell
# grab Istio and set up your PATH
$ curl -L https://git.io/getLatestIstio | sh -
$ cd istio-0.2.12
$ export PATH=$PWD/bin:$PATH

# now install Istio
$ kubectl apply -f install/kubernetes/istio.yaml

# wait for the services to come up
$ kubectl get svc -n istio-system
```

Now set up the sample BookInfo application:

```shell
# Assuming you are using manual sidecar injection, use `kube-inject`
$ kubectl apply -f <(istioctl kube-inject -f samples/bookinfo/kube/bookinfo.yaml)

# wait for the services to come up
$ kubectl get services
```

If you are on GKE, you’ll need to set up the gateway and firewall rules:

```shell
# get the worker address
$ kubectl get ingress -o wide

# get the gateway url
$ export GATEWAY_URL=<workerNodeAddress>:$(kubectl get svc istio-ingress -n istio-system -o jsonpath='{.spec.ports[0].nodePort}')

# add the firewall rule
$ gcloud compute firewall-rules create allow-book --allow tcp:$(kubectl get svc istio-ingress -n istio-system -o jsonpath='{.spec.ports[0].nodePort}')

# hit the url - for some reason GATEWAY_URL is on an ephemeral port, use port 80 instead
$ wget http://<workerNodeAddress>/<productpage>
```

The sample application should be up and running. If you are using Istio 0.3 or earlier, you’ll need to install the Docker image we built with the Circonus adapter embedded.

Load the Circonus custom resource definition (this is only needed with Istio 0.3 or earlier). Save this content as circonus_crd.yaml:

```yaml
kind: CustomResourceDefinition
apiVersion: apiextensions.k8s.io/v1beta1
metadata:
  name: circonuses.config.istio.io
  labels:
    package: circonus
    istio: mixer-adapter
spec:
  group: config.istio.io
  names:
    kind: circonus
    plural: circonuses
    singular: circonus
  scope: Namespaced
  version: v1alpha2
```

Now apply it:

```shell
$ kubectl apply -f circonus_crd.yaml
```

Edit the Istio deployment to pull in the Docker image with the Circonus adapter build (again, this is not needed if you’re using Istio 0.4 or later):

```shell
$ kubectl -n istio-system edit deployment istio-mixer
```

Change the image for the Mixer binary to use the istio-circonus image:

```yaml
image: gcr.io/istio-circonus/mixer_debug
imagePullPolicy: IfNotPresent
name: mixer
```

OK, we’re almost there. Grab a copy of the operator configuration and insert your HTTPTrap submission URL into it. You’ll need a Circonus account to get that; just sign up for a free account if you don’t have one, and create an HTTPTrap check.

Now apply your operator configuration:

```shell
$ istioctl create -f circonus_config.yaml
```

Make a few requests to the application, and you should see the metrics flowing into your Circonus dashboard! If you run into any problems, feel free to contact us in the Circonus Labs Slack, or reach out to me directly on Twitter at @phredmoyer.
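If you’d rather not click around by hand, a small Go program can generate the traffic. This is a hypothetical helper, not part of the adapter or of Istio. It takes the product page URL you fetched with wget above as its only argument (the sketch assumes BookInfo serves it at /productpage). It then sends a fixed number of sequential GET requests and reports how many returned HTTP 200.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"os"
	"time"
)

// hitProductPage sends n sequential GET requests to url and returns how many
// came back with HTTP 200. It stops at the first transport error.
func hitProductPage(url string, n int) (int, error) {
	client := &http.Client{Timeout: 5 * time.Second}
	ok := 0
	for i := 0; i < n; i++ {
		resp, err := client.Get(url)
		if err != nil {
			return ok, err
		}
		// drain and close the body so the connection can be reused
		_, _ = io.Copy(io.Discard, resp.Body)
		resp.Body.Close()
		if resp.StatusCode == http.StatusOK {
			ok++
		}
	}
	return ok, nil
}

func main() {
	if len(os.Args) != 2 {
		fmt.Fprintln(os.Stderr, "usage: loadgen http://<workerNodeAddress>/productpage")
		os.Exit(2)
	}
	const n = 50
	ok, err := hitProductPage(os.Args[1], n)
	if err != nil {
		fmt.Fprintln(os.Stderr, "request failed:", err)
		os.Exit(1)
	}
	fmt.Printf("%d/%d requests returned 200\n", ok, n)
}
```

Save it under any name (for example loadgen.go) and run it with `go run loadgen.go http://<workerNodeAddress>/productpage`. Each run should then show up as request count and request duration data in Circonus.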

This was a fun integration; Istio is definitely on the leading edge of Kubernetes, but it has matured significantly over the past few months and should be considered ready for deploying new microservices. I’d like to extend thanks to some folks who helped out on this effort. Matt Maier is the maintainer of circonus-gometrics and was invaluable in integrating CGM within the Istio handler framework. Zack Butcher and Douglas Reid are hackers on the Istio project, and a few months ago gave an encouraging ‘send the PRs!’ nudge when I talked to them at a local meetup about Istio. Martin Taillefer gave great feedback and advice during the final stages of the Circonus adapter development. Shriram Rajagopalan gave a hand with the CircleCI testing to get things over the finish line. Finally, a huge thanks to the team at Circonus for sponsoring this work, and to the Istio community for their welcoming culture that makes this type of innovation possible.