This post is Part 1 of a 2-part series about Monitoring Ephemeral Infrastructure. Part 2 will detail how Circonus uses these features to monitor our API services backed by ephemeral GCP infrastructure.

Infrastructure management has changed. Gone are the days when managing server operations also meant managing server hardware and its never-ending upgrade cycle. Thanks to containers and cloud virtualization, increased server capacity is just a button click away. And what about auto-scaling? New hosts may be brought online without you even needing to click anything. But this shift has brought with it new challenges: servers can be very ephemeral, existing for mere minutes before being destroyed, and monitoring tools have struggled to keep up. The old workflow of manually setting up monitoring and other tooling simply isn’t scalable in this new paradigm.

Here at Circonus, we’ve been making improvements to our capabilities to support the monitoring of ephemeral infrastructure. All parts of our system have experienced significant changes. From data collection and storage to alerting and visualization, we’ve rolled out many new features over the past two years which all work together to support monitoring in an ephemeral environment. Let’s dive in and see how Circonus supports monitoring ephemeral infrastructure.

Tags & Search

Circonus now supports the new Metrics 2.0 standard, an emerging set of conventions, standards and concepts around time series metric metadata. This includes tags at the metric stream level, which let users label metrics with metadata so that advanced platform capabilities can work with them. These stream tags are appended to the metric name in a special notation and are very flexible (see the tags documentation for more details). In the Circonus interface, stream tags are displayed in a special format wherever the metric name is shown:
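To make the idea of tags carried on the end of the metric name concrete, here is a minimal Python sketch that splits a tagged name into its base name and tags. It assumes the `name|ST[category:value,...]` form; the helper `split_stream_tags` and the sample metric are hypothetical, and encoded or quoted tag values are not handled, so treat the tags documentation as the authority on the real syntax.

```python
import re

# Assumed notation: "<name>|ST[cat1:val1,cat2:val2]".
# Encoded or quoted tag values are deliberately not handled in this sketch.
_STREAM_TAG_RE = re.compile(r"^(?P<name>.*?)\|ST\[(?P<tags>.*)\]$")


def split_stream_tags(metric):
    """Return (base_name, {category: value}) for a metric name.

    A name without a stream-tag suffix is returned unchanged with no tags.
    """
    match = _STREAM_TAG_RE.match(metric)
    if match is None:
        return metric, {}
    tags = {}
    for pair in match.group("tags").split(","):
        if not pair:
            continue
        # Split on the first colon only, so values may contain colons.
        category, _, value = pair.partition(":")
        tags[category] = value
    return match.group("name"), tags


# Hypothetical metric emitted by a short-lived API host.
name, tags = split_stream_tags("cpu_idle|ST[host:api-7f3a,region:us-east1]")
print(name, tags)
```

Keeping host-specific details such as the host name in tags rather than in the base name is what lets a single search or rule cover hosts that come and go.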

You can click on any stream tag in the interface to go to the Metrics Explorer and immediately start a new search based on that tag. While in the Metrics Explorer, you can click any stream tag on the page to add it to the current search and narrow your results. This new metric search works with our new “just send data” capabilities to make all new metrics immediately searchable, and even old inactive metrics can still be found easily if you expand the search date range to include the period when they were active. Well-tagged metrics are easy to find using this new metric search, which is also integrated into graphs and our new dynamic alerting. For more in-depth info about metric stream tags, see our stream tags blog post.

Just Send Data

Historically, Circonus users had to manually pre-select every metric stream that they wanted to accept into Circonus, but this is no longer the case. Our integrations are quite versatile and accept data in a variety of formats, so now you can “just send data” to Circonus and have it automatically accepted or rejected based on your filters. Our new Circonus Cloud Agent can easily pull data out of AWS, Azure, or Google Cloud, and Circonus will automatically collect the data you send, with no further configuration needed.

By default, when a new Cloud Agent check is set up, it will accept all new metrics automatically. On the last step of the config form, you’ll see the Allow/Deny Filters:

With no filters, the “Allow all unmatched” option allows all metrics to be collected automatically. When you spin up new servers, just send their metrics to this check and they’ll be collected along with your other metrics. When you deactivate metrics, simply stop sending them and they will automatically deactivate. Our new activity tracking capabilities allow us to track the metrics you use and how long they’re active, so you don’t have to worry about old inactive metrics blocking new metrics from being collected. (And best of all, there is no limit to the number of metrics we can collect in this way.)

If you want to narrow down the set of metrics that are collected, you can change your Allow/Deny Filters at any time. To change these filters, edit the appropriate check: go to the check details page and choose Edit Check from the menu. Then, on the last step of the config form, you’ll once again see the list of Allow/Deny Filters. When you set up your filters, start with general, broadly applicable rules and use more narrowly defined rules last. The filters are very flexible, so you can take either a blacklist approach or a whitelist approach. By default, the “allow all unmatched” checkbox is checked, and you can add one or more “deny” rules to blacklist certain metrics. Conversely, you can uncheck the “allow all” checkbox and then add one or more “allow” rules to whitelist certain metrics and only collect the metrics matched by your filters. Here’s an example of a whitelist approach, where only the metrics matched by the two filters are collected:
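The same whitelist and blacklist logic can be sketched in a few lines of Python. This is an illustration, not the Circonus filter engine: the function `is_collected`, the rule tuples, and the sample metric names are all hypothetical, and the way overlapping rules are resolved (here, the first matching rule decides) is an assumption that may differ from the product's actual behavior.

```python
import re


def is_collected(metric_name, rules, allow_unmatched=True):
    """Decide whether a metric would be collected.

    rules is an ordered list of ("allow" | "deny", regex) pairs.
    Assumption: the first rule whose regex matches decides the outcome.
    Metrics matched by no rule fall back to allow_unmatched, which mirrors
    the "allow all unmatched" checkbox.
    """
    for action, pattern in rules:
        if re.search(pattern, metric_name):
            return action == "allow"
    return allow_unmatched


incoming = ["cpu_idle", "request_latency", "disk_free", "debug_heap"]

# Whitelist approach: "allow all unmatched" unchecked, two allow rules.
whitelist = [("allow", r"^cpu_"), ("allow", r"^request_latency$")]
print([m for m in incoming if is_collected(m, whitelist, allow_unmatched=False)])

# Blacklist approach: "allow all unmatched" checked, one deny rule.
blacklist = [("deny", r"^debug_")]
print([m for m in incoming if is_collected(m, blacklist, allow_unmatched=True)])
```

With the whitelist, only `cpu_idle` and `request_latency` pass. With the blacklist, everything except `debug_heap` passes.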

One key benefit of this approach to metric governance is that we don’t dictate which metrics are important to you. Many monitoring services choose your metrics for you, but we believe that you know your business better than we do, and we want to empower you to collect whichever metrics provide the most value to you. We are always working on better, easier ways to give you centralized control over which metrics you collect, so very soon these Allow/Deny Filters will also support tag queries in addition to the current approach of using regular expressions. This new tag query support will make it easy to automatically filter your incoming metrics based on how you have tagged them, so watch for that feature to launch soon.

Dynamic Graphs

Graphs now support automatic population via our new metric search capabilities. We have added a new datapoint type which dynamically applies a search query at render-time to create always up-to-date views of dynamically scaling architecture and services, without needing continual re-configuration. When you’re editing a graph, select Add Datapoint from the page menu, then click the highlighted Metrics Search button in the modal:

(This is now the preferred method of populating graphs and we will be transitioning all metric datapoints to be dynamic in the near future.) Once you’ve added the Metrics Search datapoint to the graph, you can go down to the datapoints list below the graph and expand it to view all the available options.

The main search field uses the same syntax as the Metrics Explorer search, and will return all types of metrics (text, numeric and histogram). By default, the Metric Type selector (below the search field) is set to “auto” which means all three types of metrics will be rendered. If you want to filter the results or if you know the type you’re searching for, you can explicitly set the type using the Metric Type selector. This has two effects:

- It will filter out any metrics not of the specified type, i.e. if you select the numeric type, then even if histogram metrics are part of the search result set, they will be discarded and not rendered.

- In the case of numeric and histogram metrics, you will get additional config fields pertaining to that type.

When you select the histogram type you will get a Transform field which allows you to apply a histogram-only transform to each metric datapoint rendered from that search. This lets you easily calculate and render quantiles or inverse quantiles from histogram metrics, for example.
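For readers less familiar with these terms, the following Python sketch shows what a quantile and an inverse quantile mean for binned data. It is a simplified illustration, not the transform Circonus applies: the `(upper_bound, count)` bin layout, both function names, and the sample latency data are assumptions, and each result is approximated at bin boundaries.

```python
def histogram_quantile(bins, q):
    """Approximate the q-quantile (0 <= q <= 1) of a binned distribution.

    bins is a list of (upper_bound, count) pairs sorted by upper_bound.
    Returns the upper bound of the first bin at which the cumulative count
    reaches q * total, so the answer is only as precise as the bin widths.
    """
    if not 0 <= q <= 1:
        raise ValueError("q must be between 0 and 1")
    total = sum(count for _, count in bins)
    if total == 0:
        raise ValueError("histogram is empty")
    target = q * total
    cumulative = 0
    for upper_bound, count in bins:
        cumulative += count
        if cumulative >= target:
            return upper_bound
    return bins[-1][0]


def histogram_inverse_quantile(bins, threshold):
    """Fraction of samples in bins whose upper bound is <= threshold."""
    total = sum(count for _, count in bins)
    if total == 0:
        raise ValueError("histogram is empty")
    below = sum(count for upper_bound, count in bins if upper_bound <= threshold)
    return below / total


# Hypothetical request latencies in milliseconds: (upper_bound, count).
latency_bins = [(10, 50), (20, 30), (50, 15), (100, 5)]
print(histogram_quantile(latency_bins, 0.9))
print(histogram_inverse_quantile(latency_bins, 20))
```

The quantile answers "how slow is the slowest 10% of requests?", while the inverse quantile answers "what share of requests finished within 20 ms?".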

When you select the numeric type you will get several new fields. You will get a Transform field which allows you to apply a numeric-only transform to each datapoint, and this lets you easily apply anomaly detection or predictive algorithms to your numeric metrics. You will also get an Aggregate field. This will take all the metrics returned from the search and instead of rendering one metric per line on the graph, it will aggregate all the metrics into a single line via the chosen method (min, max, sum or mean).
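The effect of the Aggregate field can be sketched as follows. This is a minimal Python illustration under stated assumptions, not the graphing engine itself: the function `aggregate_series`, the `{metric_name: {timestamp: value}}` layout, and the sample data are hypothetical, and the handling of hosts that report over different time ranges (aggregate whatever is present at each timestamp) is a choice made for this sketch.

```python
from statistics import mean

# The four aggregation methods named in the text.
AGGREGATES = {"min": min, "max": max, "sum": sum, "mean": mean}


def aggregate_series(series_by_metric, method):
    """Collapse several metric series into a single line.

    series_by_metric maps a metric name to {timestamp: value}.
    Returns {timestamp: aggregated_value}, combining whichever metrics
    reported a value at each timestamp.
    """
    combine = AGGREGATES[method]
    values_at = {}
    for series in series_by_metric.values():
        for timestamp, value in series.items():
            values_at.setdefault(timestamp, []).append(value)
    return {ts: combine(vals) for ts, vals in sorted(values_at.items())}


# Hypothetical search results: api-1 is destroyed before t=120.
search_results = {
    "requests|ST[host:api-1]": {0: 10, 60: 12},
    "requests|ST[host:api-2]": {0: 7, 60: 9, 120: 4},
}
print(aggregate_series(search_results, "sum"))
print(aggregate_series(search_results, "max"))
```

Because the input is whatever the search returns at render time, the aggregated line keeps working as hosts appear and disappear.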

These Transform and Aggregate fields really boost the power of dynamic graphs, allowing you to use powerful analytical methods in your graphs without needing to constantly update your graphs each time your infrastructure changes.

Dynamic Alerting

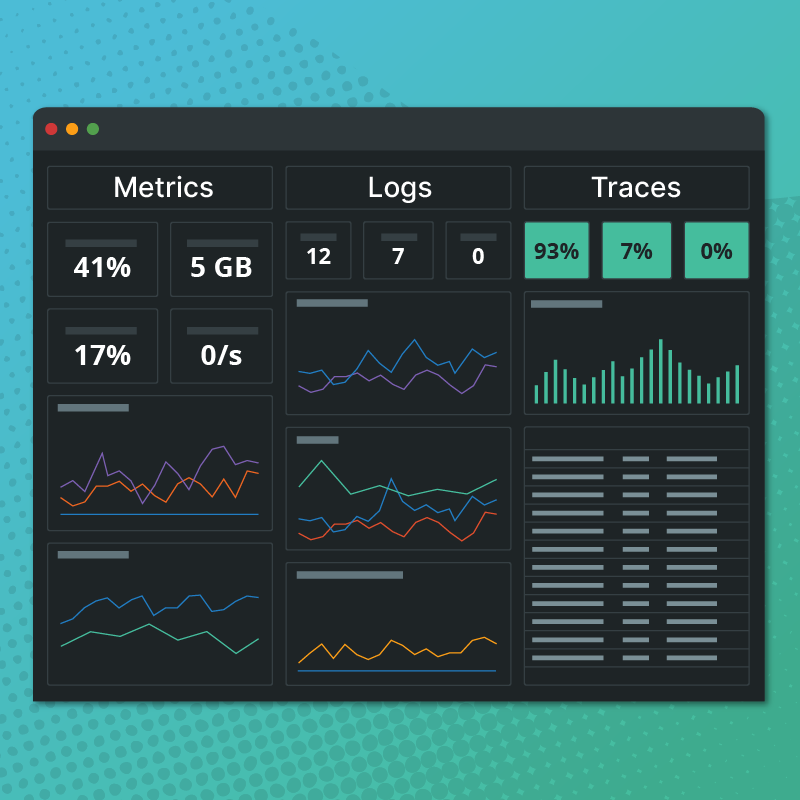

The area where we’ve most recently made significant improvements is our new dynamic alerting capabilities. Our alerting engine can now dynamically apply filter criteria to each metric stream value as it’s collected, to easily support your changing architecture and services with minimal ongoing maintenance.

We currently support both the old metric-based alerting and the new dynamic alerting (based on regular expression patterns) side by side. As you can see above, metric-based rulesets are marked with “M” badges, whereas pattern-based rulesets are marked with “P” badges.

To get started, click the New button in the upper right to open the New Ruleset modal. In this modal you can still use the Single Metric tab to add a metric-based ruleset, but under the Metric Pattern tab is where you will be able to use the full power of our new dynamic alerting.

The pattern field supports Perl-Compatible Regular Expressions, and the tag filter field supports a search query using our tag query language which you may already be familiar with, since it’s also used in the Metrics Explorer search syntax (see our tag query language documentation for more details). The pattern and tag filter are positive matches—any incoming metric name which matches both of them will be evaluated according to the attached rules. After entering a pattern and/or tag filter, be sure to select the applicable metric type (either text or numeric), since once this is chosen it cannot be changed.
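The matching step described above can be approximated in Python. This is a simplified sketch with several stand-ins: Python's `re` module is not PCRE (common patterns behave the same, but not all), a plain dictionary of required tags replaces the real tag query language, and the function `ruleset_applies` and the sample metrics are hypothetical.

```python
import re


def ruleset_applies(metric_name, tags, pattern=None, required_tags=None):
    """Return True when a metric matches every criterion that was supplied.

    pattern is a regular expression tested against the metric name.
    required_tags is a {category: value} dict that must all be present in
    tags; it is a simplified stand-in for a real tag query.
    """
    if pattern is not None and re.search(pattern, metric_name) is None:
        return False
    for category, value in (required_tags or {}).items():
        if tags.get(category) != value:
            return False
    return True


# Hypothetical ruleset: latency metrics from production API hosts.
pattern = r"^request_latency"
required = {"service": "api", "env": "prod"}

new_host = {"service": "api", "env": "prod", "host": "api-7f3a"}
staging = {"service": "api", "env": "staging", "host": "api-stg-1"}

print(ruleset_applies("request_latency_p99", new_host, pattern, required))
print(ruleset_applies("request_latency_p99", staging, pattern, required))
print(ruleset_applies("cpu_idle", new_host, pattern, required))
```

A host that did not exist when the ruleset was created is covered as soon as it sends a matching metric, which is the point of pattern-based rulesets.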

Perhaps the most powerful part of these dynamic rulesets is that you have the option of applying a ruleset across all checks in your account. If you look below the pattern and tag filter fields you will see a checkbox and a tree-style browsing pane. Use the tree-style browser to select a particular check if you only want the new ruleset to apply to metrics in that check. If you want the ruleset to apply to every metric on the account, simply check the “All Checks” checkbox. Last, I highly recommend double-checking your fields before you click Create, because once a pattern-based ruleset is created, its pattern and tag filter cannot be changed.

How Do I Get Started?

These features are all available on Circonus today, and we believe the best way to learn something is to try it out for yourself. However, we recognize that working with auto-scaling, ephemeral infrastructure is a paradigm shift in how you think about management and monitoring, and may require adjusting your approach.

To illustrate this, in Part 2 of this series we’ll walk through how these new features can be applied to a real-world scenario. Here at Circonus, we monitor our own API services which are currently hosted on ephemeral infrastructure within Google Cloud, and we will go into detail about how we set up this monitoring using our new dynamic data features.